Cambricon(688256)

Search documents

赛力斯开启港股招股 募资净额超129亿港元 销量前9月降7.79%

Chang Jiang Shang Bao· 2025-10-31 06:39

Group 1 - GAC Group reported a loss of 3,643 yuan for every vehicle sold in the first three quarters, aiming for 2 million sales of its own brand by 2027 [1] - Greenland Holdings faced 1,344 new lawsuits in 20 days, with a debt-to-asset ratio of 89%, and its new business is still in the investment phase [1] - Chongqing Bank's net profit increased by 10%, but its middle-income dropped by 27.6%, and it was fined 2.2 million for violations in loan and investment businesses [1] Group 2 - Feilong Co. experienced a decline in both revenue and profit for the first time in three years, with a fundraising of 271 million for a project that has seen little progress in six months [1] - Yushu Technology is advancing its IPO with a name change, and its overseas business accounts for 50% of its operations, with accelerated product iteration [1] - CATL achieved a record profit of 200 million per day in the third quarter, entering a global expansion cycle, with Morgan Stanley optimistic about its prospects [1] Group 3 - Industrial Fulian reported positive performance and declared its first interim dividend, accumulating nearly 150 billion in profits and distributing 63.1 billion [1] - Estun faced 4.8 billion in interest-bearing debt, having sold assets twice to recover 340 million in funds [1] - GoerTek terminated a 10 billion acquisition, while investing 24.8 billion in R&D over five and a half years across multiple business lines [1] Group 4 - Agricultural Bank made a significant move by incorporating 192 institutions in Jilin, as state-owned banks push for "village reform" in the rural credit system [1] - Aier Eye Hospital's charitable operations faced scrutiny after being penalized for insurance fraud, with weak performance leading to a stock price drop back to six years ago, and 8.7 billion in goodwill concerns [1] - R&F Properties has accumulated over 16.3 billion in execution amounts, with a 19 billion acquisition of Wanda Hotels accelerating asset sales for liquidity [1] Group 5 - Cambrian Technology reported a profit of 1.6 billion in the first three quarters, an increase of over three times, with investor Zhang Jianping increasing his stake, resulting in a cumulative profit of 3.6 billion [1] - WuXi AppTec has reduced its holdings in WuXi AppTec by 64 billion through four transactions, with a workforce reduction of 6,529 over two and a half years, and CEO Li Ge's salary at 42 million [1]

集成电路ETF(159546)开盘跌0.31%,重仓股中芯国际跌0.40%,寒武纪跌0.99%

Xin Lang Cai Jing· 2025-10-31 04:46

Core Viewpoint - The Integrated Circuit ETF (159546) opened at 1.930 yuan, experiencing a slight decline of 0.31% on October 31, 2023 [1] Group 1: ETF Performance - The performance of the Integrated Circuit ETF (159546) is benchmarked against the CSI All-Share Integrated Circuit Index return [1] - Since its establishment on October 11, 2023, the fund has achieved a return of 93.26%, while the return over the past month has been -3.63% [1] Group 2: Major Holdings - Key stocks within the Integrated Circuit ETF include: - SMIC (中芯国际) down 0.40% - Cambrian (寒武纪) down 0.99% - Haiguang Information (海光信息) down 0.08% - Lattice Technology (澜起科技) down 2.63% - GigaDevice (兆易创新) down 0.43% - Haowei Group (豪威集团) down 0.79% - Chipone (芯原股份) up 0.77% - JCET (长电科技) down 0.72% - Unisoc (紫光国微) down 0.15% - Tongfu Microelectronics (通富微电) down 2.06% [1]

新易盛获融资资金买入近54亿元丨资金流向日报

2 1 Shi Ji Jing Ji Bao Dao· 2025-10-31 04:08

Market Overview - The Shanghai Composite Index fell by 0.73% to close at 3986.9 points, with a daily high of 4025.7 points [1] - The Shenzhen Component Index decreased by 1.16% to 13532.13 points, reaching a maximum of 13700.25 points [1] - The ChiNext Index dropped by 1.84% to 3263.02 points, with a peak of 3331.86 points [1] Margin Trading and Securities Lending - The total margin trading and securities lending balance in the Shanghai and Shenzhen markets was 24911.76 billion yuan, with a financing balance of 24732.7 billion yuan and a securities lending balance of 179.06 billion yuan, reflecting a decrease of 75.56 billion yuan from the previous trading day [2] - The Shanghai market's margin trading balance was 12657.39 billion yuan, down by 39.35 billion yuan, while the Shenzhen market's balance was 12254.37 billion yuan, decreasing by 36.21 billion yuan [2] - A total of 3456 stocks had financing funds for purchase, with the top three being Xinyi Technology (53.65 billion yuan), Zhongji Xuchuang (46.23 billion yuan), and Sunshine Power (36.47 billion yuan) [2] Fund Issuance - Four new funds were issued yesterday, including two mixed funds and two stock funds, all launched on October 30, 2025 [3][4] Top Trading Activities - The top ten net buying amounts on the Dragon and Tiger List included Jiangte Electric (27681.86 million yuan), Tianji Shares (20137.13 million yuan), and Guodun Quantum (16408.1 million yuan) [5] - The highest price increase was seen in Jiangte Electric with a rise of 9.98%, followed by Tianji Shares with a 10.0% increase [5]

人工智能三维共振支撑国产芯片及云计算发展,数字经济ETF(560800)盘中蓄势

Xin Lang Cai Jing· 2025-10-31 03:17

Core Viewpoint - The digital economy theme index has shown fluctuations, with specific stocks performing variably, while the government supports mergers and acquisitions in strategic emerging industries [1][2]. Group 1: Digital Economy Index Performance - As of October 31, 2025, the CSI Digital Economy Theme Index (931582) decreased by 1.44% [1]. - Leading stocks included Deepin Technology (300454) with a rise of 6.51%, while Lattice Technology (688008) led the decline with a drop of 7.24% [1][4]. - The digital economy ETF (560800) experienced a turnover of 1.41% during the trading session, with a total transaction value of 9.5176 million yuan [1]. Group 2: Market Trends and Government Support - The Beijing municipal government has issued opinions to support mergers and acquisitions aimed at promoting high-quality development of listed companies, focusing on strategic emerging industries [1]. - Key sectors for development include artificial intelligence, healthcare, integrated circuits, smart connected vehicles, cultural industries, and renewable energy [1]. Group 3: ETF and Index Composition - The digital economy ETF closely tracks the CSI Digital Economy Theme Index, which includes companies with high digitalization levels [2]. - As of September 30, 2025, the top ten weighted stocks in the index accounted for 54.31% of the total index weight, with Dongfang Fortune (300059) being the highest at 8.64% [2].

寒武纪的前世今生:营收行业十一,净利润第三,毛利率超行业均值近20个百分点

Xin Lang Cai Jing· 2025-10-30 23:33

Core Viewpoint - Cambricon Technologies, established in March 2016 and listed on the Shanghai Stock Exchange in July 2020, is a leading player in the AI chip sector in China, focusing on the research, design, and sales of AI core chips for various applications [1] Financial Performance - In Q3 2025, Cambricon achieved a revenue of 4.607 billion yuan, ranking 11th among 48 companies in the industry, significantly above the industry average of 2.912 billion yuan and median of 1.156 billion yuan, but still trailing behind the top two competitors, OmniVision and Jiangbo Long, with revenues of 21.783 billion yuan and 16.734 billion yuan respectively [2] - The net profit for the same period was 1.604 billion yuan, placing the company 3rd in the industry, with OmniVision and Haiguang Information leading at 3.199 billion yuan and 2.841 billion yuan respectively [2] Financial Ratios - As of Q3 2025, Cambricon's debt-to-asset ratio was 10.12%, down from 15.56% year-on-year and significantly lower than the industry average of 24.46%, indicating strong solvency [3] - The gross profit margin for Q3 2025 was 55.29%, slightly up from 55.23% year-on-year and higher than the industry average of 36.52%, reflecting robust profitability [3] Shareholder Information - As of September 30, 2025, the number of A-share shareholders increased by 52.13% to 62,000, while the average number of circulating A-shares held per shareholder decreased by 34.13% to 6,748.35 shares [5] Growth and Investment - Cambricon's revenue for the first three quarters of 2025 grew by 2386.38% year-on-year, with a net profit of 1.605 billion yuan, marking a turnaround from losses [6] - The company maintained high R&D investment, totaling 715 million yuan in the first three quarters, and inventory reached a record high of 3.729 billion yuan, indicating potential for accelerated demand [6] - A private placement raised 3.985 billion yuan for investment in chip and software development and to supplement working capital [6] Market Outlook - Analysts predict that Cambricon will benefit from the rapid growth in domestic demand for AI computing chips, with projected revenues of 6.396 billion yuan, 14.053 billion yuan, and 29.494 billion yuan for 2025, 2026, and 2027 respectively, alongside corresponding net profits of 2.095 billion yuan, 5.349 billion yuan, and 12.924 billion yuan [6]

AI驱动算力高增长 产业链公司“大丰收”

Shang Hai Zheng Quan Bao· 2025-10-30 18:29

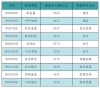

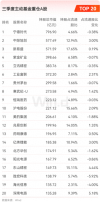

Core Insights - The A-share computing power industry chain is experiencing significant growth, with 143 out of 147 listed companies reporting profits in the first three quarters of the year, driven by the global AI wave and accelerated domestic infrastructure deployment [2][3] Group 1: Company Performance - Among the 143 companies, 118 achieved profitability, with 32 companies doubling their profits year-on-year [2] - Industrial Fulian emerged as the "profit king" with a net profit of 22.487 billion yuan, a year-on-year increase of 48.52% [2][10] - Zhongji Xuchuang reported a net profit of 7.132 billion yuan, marking a remarkable growth of 90.05% [2][10] - Han's Semiconductor turned a loss of 724 million yuan last year into a profit of 1.605 billion yuan, with revenue soaring by 2386.38% to 4.607 billion yuan [4][10] - New Yisheng's revenue reached 16.505 billion yuan, up 221.70%, with a net profit of 6.327 billion yuan, increasing by 284.38% [6][10] Group 2: Sector Analysis - The server sector is the main driver of growth, with Industrial Fulian's revenue reaching 243.172 billion yuan, a 42.81% increase, and a net profit of 10.373 billion yuan, up 62.04% [3][10] - The PCB industry is also witnessing growth, with Shenghong Technology's revenue increasing by 83.40% to 14.117 billion yuan and net profit soaring by 324.38% to 3.245 billion yuan [7][10] - The light module sector is experiencing a positive cycle of "technological breakthroughs, product volume, and performance realization," with Zhongji Xuchuang's revenue growing by 44.43% to 25.005 billion yuan [5][10] Group 3: Market Trends - The demand for AI computing power is expected to remain high, with light module companies like New Yisheng anticipating continued growth in 1.6T products [6][8] - The PCB industry is moving towards high-end products, driven by AI data center construction needs, with increased technical barriers and capital requirements [8]

公募三季报持仓洗牌:科技股“七雄”霸榜,茅台失宠,ST华通成黑马

Hua Xia Shi Bao· 2025-10-30 13:16

Core Viewpoint - The report highlights significant shifts in the holdings of actively managed equity funds in the third quarter of 2025, with a notable rise in technology stocks and a decline in traditional consumer stocks like Kweichow Moutai [3][4][6]. Group 1: Fund Holdings Overview - As of September 2025, the total assets under management in the public fund industry reached 35.85 trillion yuan, a quarter-on-quarter increase of 6.30% [3]. - The top three holdings of actively managed equity funds are dominated by technology companies, with CATL reclaiming the top position, surpassing Tencent Holdings [3][4]. - Kweichow Moutai's total market value held by active equity funds decreased to 29.958 billion yuan, down from 30.616 billion yuan in the previous quarter, dropping from third to seventh place among top holdings [3][6]. Group 2: Technology Sector Performance - The technology sector emerged as the primary focus for public fund investments, with seven out of the top ten holdings being technology-related companies [4]. - Notable performers include Xinyi Technology and Zhongji Xuchuang, both of which ranked among the top three heavyweights [4]. - The current market trend indicates a strong and sustained interest in technology stocks, driven by China's economic transformation towards a hard-tech model [4][5]. Group 3: Challenges in Traditional Consumer Sector - The traditional consumer sector, particularly the liquor industry, is facing significant challenges, with 59.7% of liquor companies reporting a decrease in operating profits [6][7]. - The white liquor market is undergoing a deep adjustment phase due to policy changes, consumption structure transformation, and intense competition [6][7]. - The overall sales volume in the liquor industry is expected to decline by over 20% year-on-year, reflecting macroeconomic fluctuations and slow recovery in consumer spending [7][8]. Group 4: Fund Manager Strategies - The top five stocks with increased holdings include Zhongji Xuchuang, Industrial Fulian, ST Huatuo, Dongshan Precision, and Hanwha Technology, all of which are technology companies [9][10]. - Conversely, the top stocks with reduced holdings include Shenghong Technology and Haiguang Information, with significant sell-offs attributed to internal management's actions [11]. - Despite CATL being the top holding, it also appears on the list of reduced holdings, indicating a complex strategy among institutional investors [11].

2025年三季报公募基金十大重仓股持仓分析

Huachuang Securities· 2025-10-30 12:50

Market Performance - Since July 2025, major indices have risen significantly, with the ChiNext 50, ChiNext Index, and Sci-Tech 50 increasing by over 45%[1] - The Shanghai Composite Index, CSI 300, CSI 500, CSI 1000, and CSI 2000 have risen by 15.79%, 19.20%, 24.10%, 17.67%, and 14.89% respectively[1] Fund Establishment and Holdings - A total of 90 equity-oriented active funds were established in Q3 2025, with a total share of 554.04 billion[2] - The average stock position of various types of equity-oriented active funds increased compared to Q2 2025[3] Industry Distribution - The industries with increased holdings of over 100 billion include electronics, communication, power equipment and new energy, computer, non-ferrous metals, machinery, pharmaceuticals, and media[4] - The electronics sector saw a holding increase of 5.17%, while communication increased by 3.95%[4] Individual Stock Distribution - The top five stocks with the largest increase in holdings are Zhongji Xuchuang, Xinyi Sheng, Industrial Fulian, CATL, and Cambricon[5] - The largest holdings in A-shares are CATL, Xinyi Sheng, Zhongji Xuchuang, Luxshare Precision, and Industrial Fulian[5] Large Fund Holdings Analysis - As of October 28, 2025, there are 34 equity-oriented active funds with holdings exceeding 100 billion, an increase of 10 from the previous quarter[6] - The stocks with the most significant changes in holdings among large funds include Zhongji Xuchuang, Xinyi Sheng, Luxshare Precision, CATL, and Industrial Fulian[6] Hong Kong Stock Holdings - The top six Hong Kong stocks held by funds in Q3 2025 include Tencent Holdings, Alibaba-W, SMIC, Innovent Biologics, Pop Mart, and Xiaomi Group-W, each with a market value exceeding 10 billion[7]

2025Q3公募基金及陆股通持仓分析:内外资成长仓位均历史性抬升

Huaan Securities· 2025-10-30 12:30

Group 1 - In Q3 2025, the total market value of public actively managed equity funds and Stock Connect holdings in A-shares significantly increased, with public equity funds holding A-shares worth 3.56 trillion, a substantial increase of 21.5% from the previous quarter, and Stock Connect holdings reaching 2.59 trillion, up 12.9% [5][18][124] - The overall position of public actively managed equity funds continued to rise, with an overall position of 85.77%, an increase of 1.31 percentage points from the previous quarter, and over 40% of funds now have a high position of over 90% [5][25][31] - The concentration of heavily held stocks in public funds has increased, with CR10, CR20, and CR50 concentration rising by 1.64, 2.21, and 1.68 percentage points respectively [5][107] Group 2 - Both public funds and foreign capital through Stock Connect showed a high degree of consensus in style selection, significantly increasing their holdings in the growth sector (domestic +8.68%, foreign +10.52%) while reducing their positions in the financial sector (domestic -4.07%, foreign -6.17%) and consumer sector (domestic -4.17%, foreign -3.62%) [6][130] - In the consumer sector, both domestic and foreign investors continued to significantly reduce their holdings in food and beverage (domestic -1.67%, foreign -2.08%), as well as in automobiles (domestic -1.54%, foreign -0.30%) and home appliances (domestic -0.89%, foreign -0.79%) [6][51] - In the growth sector, both domestic and foreign investors significantly increased their holdings in electronics (domestic +3.79%, foreign +4.86%) and electrical equipment (domestic +2.01%, foreign +4.87%) [6][65] Group 3 - The financial sector saw a significant reduction in holdings, with both domestic and foreign investors heavily reducing their positions in banks (domestic -3.96%, foreign -4.38%) [6][97] - In the cyclical sector, there was a high degree of consensus, with both domestic and foreign investors significantly increasing their holdings in non-ferrous metals (domestic +1.42%, foreign +1.21%) while reducing their positions in public utilities [6][75] - The overall position in the cyclical sector continued to decline slightly, with more than half of the industries being reduced, particularly in public utilities and transportation [6][76]

5.6万亿ETF撑起A股“稳定器”大旗?

Huan Qiu Lao Hu Cai Jing· 2025-10-30 11:23

Core Insights - The ETF market is experiencing explosive growth, with the total scale of non-money ETFs surpassing 5.6 trillion yuan and the number of products exceeding 1,300 as of the end of September [1][2] - The rapid growth of ETFs is significantly driven by policy support, particularly noted in 2024, where the scale increased by nearly 1.7 trillion yuan [2] - ETFs are playing a stabilizing role in the market, smoothing out volatility and providing liquidity during downturns, while also being a preferred tool for investors looking to "buy the dip" [1][6][7] ETF Market Growth - The ETF scale grew from 1 trillion yuan to 5 trillion yuan in less than six years, with a notable acceleration starting in 2020 [2] - By the end of Q3 2025, passive fund scale accounted for approximately 33.55% of the market, with ETFs making up 78% of passive funds [2][3] Fund Company Landscape - As of the latest data, there are 54 fund companies engaged in ETF business, with a high concentration of management scale among the top firms [3] - The top five non-money ETF management companies are 华夏基金, 易方达基金, 华泰柏瑞基金, 南方基金, and 嘉实基金, collectively managing over 25% of the total ETF scale [4] Market Stability Role - ETFs are seen as market stabilizers, helping to reduce volatility caused by large inflows and outflows of capital [6][7] - Institutional investors, such as 中央汇金, are using ETFs to stabilize the market, particularly focusing on broad-based ETFs [7] Individual Stock Impact - While ETFs help smooth market fluctuations, they can also contribute to significant price movements in individual stocks during rebalancing events [10][11] - For instance, the stock price of 寒武纪 experienced a notable decline due to ETF rebalancing, highlighting the dual role of ETFs in both stabilizing and amplifying market movements [11][12] Sector Trends - The trend of investing through ETFs in popular sectors is increasing, with significant inflows into themes like robotics and innovative pharmaceuticals [3][8] - The performance of individual stocks, such as 药捷安康, has been heavily influenced by their inclusion in ETF indices, leading to rapid price increases followed by sharp corrections [12]